Ten Forward at the 2018 GPU Technology Conference

Once a year, thousands of deep learning and graphics nerds flock to San Jose for NVIDIA’s cutting-edge technology conference. Topics covered include advanced robotics, self-driving cars, and virtual reality. Ten Forward iOS and graphics developer and all-around nerdette Janie Clayton attended NVIDIA's GPU Technology Conference and is reporting back on the trends and technologies that are shaping our world, giving a peek into the future.

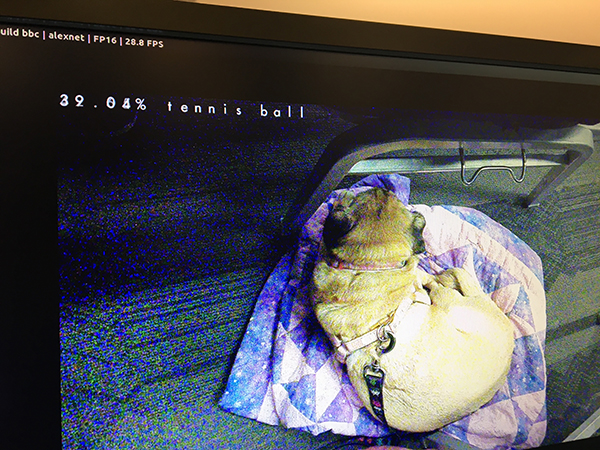

Machine Vision and Deep Learning

All of the innovations being displayed at GTC are built on the concepts of machine vision and deep learning. The foundational ideas behind machine vision involve thinking about how to teach a computer what something (like my service dog, Delia) looks like.

We humans can look at a dog and know what it is, but how would you express that to a computer whose entire brain is built upon ones and zeros?

To do that, you would take an image, which is made up of pixels, and figure out a mathematical representation of each pixel. You would then teach the computer that certain groupings of pixels represent a feature, like an edge or an eye or an ear.

It would then learn that collections of features compose a specific object, like a dog or a car or a lollipop. This is done by feeding incredibly large, labeled data sets to the computer and letting it break them down and look for the patterns that, as far as it can tell, make up a dog.
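To make the pixels-to-features idea concrete, here is a minimal sketch in plain Python. It assumes a made-up 5x5 grayscale image and uses a Sobel kernel, one common hand-written edge detector. The image, the function name `apply_kernel`, and the choice of kernel are illustrative assumptions, not something shown at GTC; a deep learning model would learn kernels like this from data rather than have them written by hand.

```python
# Hypothetical 5x5 grayscale image: each number is one pixel's brightness.
# The left two columns are dark (0) and the right three are bright (255).
image = [
    [0, 0, 255, 255, 255],
    [0, 0, 255, 255, 255],
    [0, 0, 255, 255, 255],
    [0, 0, 255, 255, 255],
    [0, 0, 255, 255, 255],
]

# Sobel kernel that responds to left-to-right changes in brightness,
# which is what a vertical edge looks like numerically.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def apply_kernel(img, kernel):
    """Slide a 3x3 kernel over the interior pixels and return the responses."""
    height, width = len(img), len(img[0])
    responses = []
    for y in range(1, height - 1):
        row = []
        for x in range(1, width - 1):
            total = 0
            for ky in range(3):
                for kx in range(3):
                    total += kernel[ky][kx] * img[y + ky - 1][x + kx - 1]
            row.append(total)
        responses.append(row)
    return responses


edges = apply_kernel(image, SOBEL_X)
for row in edges:
    print(row)  # each row prints [1020, 1020, 0]
```

The large values sit where the neighborhood straddles the dark-to-bright boundary, and the zero sits where the pixels are uniformly bright. That grouping of numbers is the "edge" feature.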

Deep learning involves a similar process: it takes things like medical images or user data and looks for patterns it can apply to new data. Anything that can be represented numerically and has a large enough data set can be analyzed in this manner. It’s an art as well as a science, because you need to know how to tune and train the computer to look for information that is useful.
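As a rough sketch of what "learning patterns from labeled data and applying them to new data" means, here is a single-neuron perceptron in plain Python. This is far simpler than the deep networks discussed at GTC, and everything in it is an assumption made for illustration: the four training examples, the two made-up feature scores, and the helper names `predict` and `train`.

```python
# Hypothetical labeled data set: each example is (features, label).
# The two features are made-up scores between 0 and 1 (say, "ear pointiness"
# and "snout length"). Label 1 means "dog" and 0 means "not a dog".
TRAINING_DATA = [
    ((0.9, 0.8), 1),
    ((0.8, 0.9), 1),
    ((0.1, 0.2), 0),
    ((0.2, 0.1), 0),
]


def predict(weights, bias, features):
    """Return 1 ("dog") if the weighted sum of the features is positive."""
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train(data, epochs=20, learning_rate=1.0):
    """Nudge the weights toward the right answer after every wrong guess."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            error = label - predict(weights, bias, features)
            for i, value in enumerate(features):
                weights[i] += learning_rate * error * value
            bias += learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)

# Apply the learned pattern to examples that were not in the training data.
print(predict(weights, bias, (0.85, 0.75)))  # prints 1
print(predict(weights, bias, (0.15, 0.15)))  # prints 0
```

The tuning mentioned above shows up even here: the number of epochs and the learning rate are choices a person makes, and real models have many more of them.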

Autonomous Vehicles

One of the biggest trends over the last year in AI has been self-driving cars. Shortly before GTC, there was a tragedy involving an Uber autonomous vehicle hitting and killing a woman who was walking her bike across the street.

This was a major topic of discussion among the experts at GTC. Uber didn’t have the best reputation even before this accident. Many people felt they were cutting corners, and that an accident such as this would push the technology back due to public backlash.

If you watch the dashboard camera video, it looks pretty clear that the accident would have been unavoidable for a human driver. The victim appears on the camera a split second before she is hit. It would be impossible for a human to react that quickly to that situation. However, the goal with autonomous vehicles is to be better than a human. Machines have a vast multitude of sensors at their disposal that allow them to sense more than we can. An infrared camera, for instance, would have picked up on the pedestrian long before a human would have been able to see her in the dark.

Autonomous cars would be a godsend to many elderly Americans who are unable to drive any longer, as well as persons with disabilities. The United States is, for the most part, car-oriented. There are small pockets in major urban areas with excellent public transportation, but this isn’t everywhere.

For example, one of the members of the Madison School Board, Nicole Vander Meulen, has cerebral palsy and is unable to drive. She is a lawyer and needs to live within walking distance of the courthouse. She needs to call for cabs any time she needs to get anywhere. She has spoken for years about how she can’t wait for self-driving cars to exist so that she can finally have the same independence that many of us take for granted.

Collaborative Virtual Reality

Virtual reality has been on a hot streak recently, as hardware has progressed to the point that the promise of VR can be fulfilled.

VR still requires pricey gaming headsets that most people can’t afford; as a result of this smaller market, many game developers are not targeting VR yet.

However, VR is beginning to be used in more than just the gaming industry. One such use case is collaborative VR.

Pretend you are an architect designing a building that will be built halfway across the world. Ideally, you would want to be on-location, but that isn’t always feasible. One solution being put forward by companies is collaborative VR. This would allow the architect and the builder to both enter a virtual environment where they could work together on a 3D model of the building without both people needing to be in the same physical location.

Luxury car manufacturers have also been exploring the use of virtual reality. BMW and Cadillac have used VR technology to create hyper-realistic virtual showrooms for their cars. If you want to customize a BMW and want to know how the beige leather would look, you can customize this in virtual reality with realistic texturing and lighting. You can also look at the internal mechanisms of the car in these simulated realities. This allows a far better user experience for potential customers to get exactly what they want without having to have every permutation of car available locally.

Robotic Automation

A major trend in the manufacturing industry is the push toward automation. Robotic systems can work faster and more accurately than most humans without the risk of accident.

Robotics systems like the one pictured on the left utilize machine vision to accurately place parts and packages on conveyor belts and on shelves. They also have safety systems to ensure that they do not hit any people and that they stop if something has gone wrong.

There has been a huge concern about robots taking the jobs of humans, but as we move through the 21st century, there will simply be more repetitive, difficult work to do than there will be people to do it.

We are already reaching a crisis point in agriculture where there are not enough people to do the backbreaking work of harvesting the food we eat at a price that is sustainable by the market.

Agricultural automation isn’t here yet, due to the delicacy of the food we eat, but it’s a major target for future automation.

Security, Mobility, and Edge Computing

Right before the conference, a major news story was the Cambridge Analytica scandal.

Facebook and other large technology companies have vast quantities of information on their users. Anything that is kept in the cloud has the possibility to be hacked.

Keeping information secure is vitally important not just for companies, but also for government entities. Many government agencies are not allowed to network their computers for security reasons.

There are also situations where you may simply not have access to a network, such as if you were underwater or out in space. Trying to figure out mobile and secure computing solutions for drones and soldiers is a growing field of concern for technology companies.

NVIDIA has released an embedded AI computing device, the Jetson TX2 (I picked one up at the conference). This device is intended for uses like drone deployment, or for situations where you can’t or don’t want to connect to a network to access computing power for machine vision.

Currently a lot of processing is done on Amazon Web Services instances. NVIDIA has a lot of tools and frameworks through Jupyter notebooks to do deep learning training on the web, but there is a growing use case for doing work on device and avoiding the web. The web has a place in the world, but not everything can or should require a connection to the web to work.

We all walk around with mobile supercomputers in our pockets. As the author of a book on how to do mobile supercomputing on device, this area was of interest to me.

Takeaways

Deep learning and machine vision are rapidly becoming embedded in everything that we use and touch. I specifically wanted to attend graphics talks at GTC, and most of the graphics and VR talks I saw utilized deep learning models to gain better performance.

One interesting talk I saw was on using intelligent procedural generation to create art assets in games. Right now, there are artists all over the world working all day long to make realistic looking rocks and background characters that no one will notice in games that cost hundreds of millions of dollars to create.

Being able to free these people up to only work on the specific things users will notice would drastically cut down on the development cost of AAA video games, and allow developers to focus on the things that make the game awesome.

This just goes to show that deep learning has implications for all industries, from medical to defense to even video games.

Medical imaging and healthcare are implementing VR and deep learning to advance our healthcare system and extend our lives. Tools such as TensorFlow are making the barrier to entry for deep learning far lower than it has ever been. You don’t need to be a data scientist or even understand the algorithms being used to take advantage of advances in deep learning (just hire us!).

The future won’t be televised. It will be automated.

Janie is super-smart and can do all (er, most) of the development tricks you could dream of - and some you can't! - whether it's deep learning or augmented reality or photographing small service dogs. Interested in utilizing Janie's skills for the benefit of your idea or company? Contact Ten Forward Consulting for more information about how we can level up your business.